Some deliveries are just boxes. This one was a supercomputer.

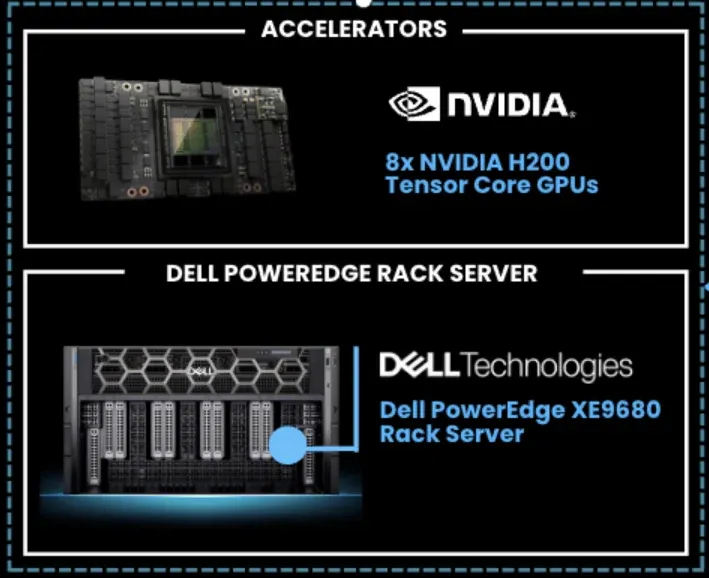

We unboxed and rolled in the Dell PowerEdge XE9680 — yeah, the same monster machine that’s powering Elon Musk’s Grok supercomputer.

Not one, not two, but a whole cluster of them is heading straight into a customer’s data center in Qatar, and we’re the lucky ones who get to build and play with it along the way.

And let us tell you: it’s not every day you get to work with one of the fastest AI servers in the world.

Why We’re Losing Our Minds Over This

These aren’t just servers. They’re the backbone of Grok — the AI system that everyone’s talking about. If Grok is the celebrity, then the XE9680 is the star-studded stage it performs on.

Inside? You’ll find NVIDIA’s H200 GPUs, the heavyweights of AI acceleration.

Think: more memory, more speed, more raw brainpower than most people will ever need in a lifetime.

Training large language models? Easy. Running generative AI? Smooth. Scaling clusters that feel more like mini supercomputers?

That’s literally what they’re built for.

And here’s the part that gives us goosebumps: this isn’t just happening in Silicon Valley or inside Musk’s labs — we’re setting this up right here in Qatar.

Building the Cluster (aka Our Playground)

We’re not just dropping hardware off at the doorstep. Nope. We’re:

- Clustering multiple Dell PowerEdge XE9680s into a beast of a system that can scale like few servers on the planet.
- Configuring and integrating NVIDIA H200 Tensor Core GPUs, each packing massive high-bandwidth memory (HBM3e) and designed to accelerate the largest AI models out there.
- Helping our customer tap into the same firepower Grok is running on: the kind of setup built for training giant LLMs, generative AI workloads, and advanced simulations.
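To put a rough number on "massive", here is a back-of-the-envelope sketch in Python. It assumes NVIDIA's published figure of 141 GB of HBM3e per H200 and eight GPUs per XE9680. The function names and the node counts are purely illustrative (we're not sharing the customer's actual cluster size), and the second helper only counts model weights, ignoring activations, KV cache, and optimizer state.

```python
import math

# Assumption: NVIDIA's published H200 spec of 141 GB HBM3e per GPU.
H200_HBM_GB = 141
# The XE9680 carries eight GPUs per server.
GPUS_PER_NODE = 8


def cluster_hbm_gb(nodes: int) -> int:
    """Total GPU memory (GB) across `nodes` XE9680 servers."""
    return nodes * GPUS_PER_NODE * H200_HBM_GB


def min_gpus_for_weights(params_billions: float, bytes_per_param: int = 2) -> int:
    """Minimum GPUs needed just to hold model weights.

    Rough lower bound only: ignores activations, KV cache, and
    optimizer state. bytes_per_param=2 corresponds to FP16/BF16.
    """
    # 1e9 params * bytes per param / 1e9 bytes per GB = GB of weights
    weights_gb = params_billions * bytes_per_param
    return math.ceil(weights_gb / H200_HBM_GB)


if __name__ == "__main__":
    # Hypothetical node counts, for illustration only.
    for nodes in (1, 4):
        print(f"{nodes} node(s): {cluster_hbm_gb(nodes)} GB of HBM3e")
    print("GPUs to hold a 405B-parameter model in BF16:", min_gpus_for_weights(405))
```

Under those assumptions, a single server already carries a little over a terabyte of GPU memory, which is why a handful of them starts to feel like a mini supercomputer.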

Honestly? It’s a mix of serious engineering and pure kid-in-a-candy-store vibes.

Our team’s having a blast unboxing, installing, and fine-tuning these machines.

There’s something surreal about sliding an XE9680 (eight dual-width GPUs, liquid cooling, and 400Gb networking) into a rack and realizing this setup could chew through workloads that push the limits of AI today.

Why This Matters (to Us, and to You)

Sure, delivering one of the fastest AI servers in the world is a milestone for our company. But it’s also a sign of what’s next.

If Qatar can run Grok-level hardware in its own data centers, what’s stopping anyone else?

From finance to healthcare to research labs, this is the kind of infrastructure that unlocks the future of AI.

And we’re here to deliver it, configure it, and make sure it works like magic.

At the end of the day, we’re just excited. Excited to play with it. Excited to deliver it. And excited to share that the future of AI doesn’t just live in California; it’s being built right here, too.

Stay tuned. This is only the beginning.