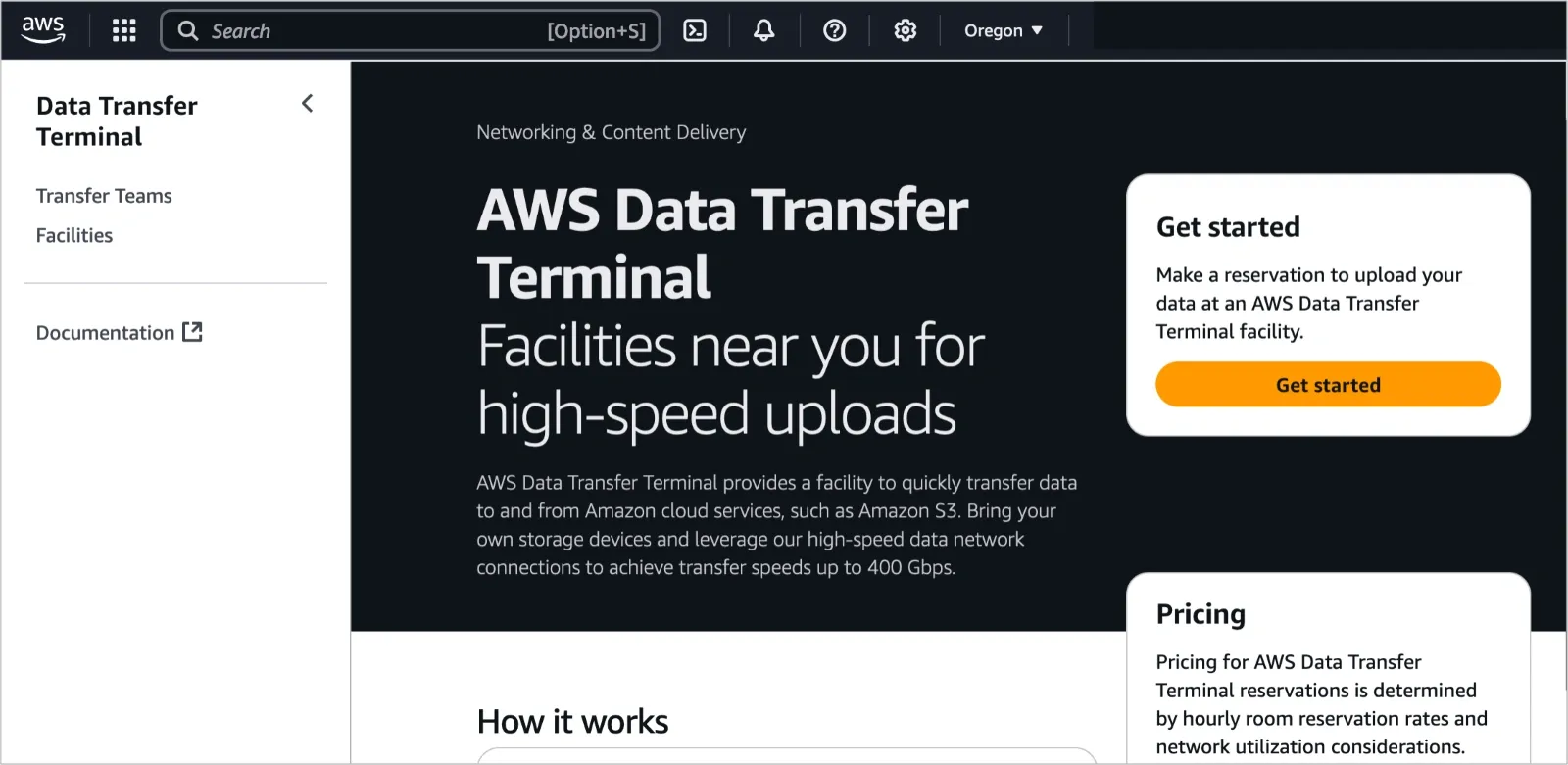

At the much-anticipated re:Invent 2024, Amazon Web Services (AWS) officially introduced its latest offering aimed at smoothing migration to the cloud: Data Transfer Terminals (DTT).

These facilities let customers upload data directly from their own storage devices to the cloud over high-speed connections, using familiar AWS endpoints such as Amazon S3 and Amazon Elastic File System (EFS).

Starting with two initial locations in Los Angeles and New York, AWS's DTTs are designed for scenarios where massive datasets need to be transferred quickly and securely.

Think media production houses, training data for advanced driver-assistance systems in the automotive industry, legacy data migration in financial services, or industrial equipment generating streams of sensor data: industries where time is money and delays are costly.

What Makes DTTs Unique?

Unlike traditional data transfer methods, the DTT model eliminates the days or weeks often required to ship physical storage devices to AWS for upload.

Customers can now reserve a time slot at a DTT facility, bring their storage device, and upload their data to AWS over high-speed uplinks, reported to include at least two 100G optical fiber connections.

AWS positions this as a game-changing solution for industries handling terabytes or petabytes of data that need to be moved efficiently.

By reserving and scheduling upload windows via the AWS Console, customers can transfer their data "within minutes" instead of enduring long shipping or transfer delays.
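To put "within minutes" in perspective, a back-of-the-envelope estimate helps. The sketch below converts a dataset size and a link speed into a transfer time. The function name `transfer_time_seconds` and the `efficiency` factor (the share of line rate actually achieved) are assumptions for illustration, not figures AWS has published.

```python
def transfer_time_seconds(size_tb: float, link_gbps: float = 100.0,
                          efficiency: float = 0.8) -> float:
    """Estimate the time to move `size_tb` decimal terabytes over one link.

    `efficiency` is a hypothetical fraction of line rate actually achieved
    (protocol overhead, source disk read speed, and so on).
    """
    if size_tb < 0 or link_gbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("need size >= 0, speed > 0, and 0 < efficiency <= 1")
    size_bits = size_tb * 1e12 * 8              # decimal TB -> bits
    usable_bps = link_gbps * 1e9 * efficiency   # Gbit/s -> usable bit/s
    return size_bits / usable_bps


if __name__ == "__main__":
    for tb in (1, 10, 100):
        minutes = transfer_time_seconds(tb) / 60
        print(f"{tb:>4} TB over one 100G link: about {minutes:.1f} min")
```

Under these assumptions, 1 TB moves in under two minutes, while 100 TB takes close to three hours, so "minutes" applies to terabyte-scale jobs rather than petabyte-scale ones. In practice the source device's read speed may well be the real bottleneck.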

How the Process Works

Here's a simplified step-by-step view of the Data Transfer Terminal process:

- Reservation: Customers use the DTT console to choose a location, date, time, and duration for their data transfer session.

- Access and Authorization: Customers select authorized users to access the terminal, and AWS also provides instructions on how to access the facility.

- Uploading: Customers connect their prepared storage device to the DTT with 100G LR4 QSFP transceivers and other recommended hardware.

- Processing: Once the data is in AWS, it can be processed right away with tools such as Amazon Athena for analytics, SageMaker for machine learning, and EC2 for custom applications.
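For the uploading step, large files sent to S3 would typically go through S3 multipart upload, which allows at most 10,000 parts per upload, each between 5 MiB and 5 GiB (the last part may be smaller). The sketch below shows one way to pick a part size before a session. The function name `plan_parts` and the 64 MiB default are illustrative choices, not AWS recommendations.

```python
MIB = 1024 ** 2
GIB = 1024 ** 3
MIN_PART = 5 * MIB     # S3 minimum part size (last part may be smaller)
MAX_PART = 5 * GIB     # S3 maximum part size
MAX_PARTS = 10_000     # S3 maximum number of parts per multipart upload


def plan_parts(object_size: int, preferred_part: int = 64 * MIB) -> tuple[int, int]:
    """Return (part_size, part_count) for uploading `object_size` bytes.

    `preferred_part` is a hypothetical default; tune it for your hardware.
    """
    if object_size <= 0:
        raise ValueError("object_size must be positive")
    # Smallest part size that keeps the upload within MAX_PARTS parts
    # (integer ceiling division avoids float rounding on huge sizes).
    needed = -(-object_size // MAX_PARTS)
    part_size = max(MIN_PART, preferred_part, needed)
    if part_size > MAX_PART:
        raise ValueError("object too large for a single multipart upload")
    part_count = -(-object_size // part_size)
    return part_size, part_count


if __name__ == "__main__":
    size, count = plan_parts(1 * GIB)
    print(f"1 GiB file: {count} parts of {size // MIB} MiB")
```

Most S3 clients handle this automatically, but checking it ahead of time avoids discovering a part-count limit in the middle of a paid reservation.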

High-Throughput Uplinks

DTT's performance comes from its twin 100G fiber-optic connections to the AWS network.

AWS has not yet announced actual upload speeds, but the setup has the hallmarks of a high-throughput infrastructure suited to data-intensive industries.

To get the most out of the connection, customers should use the following:

- 100G LR4 QSFP transceivers

- Active IP configuration via DHCP

- Latest software and drivers

A Complement to Existing AWS Services

DTT complements AWS's existing Snowball and Direct Connect services. Snowball requires shipping a storage device to AWS for upload, and Direct Connect provides a persistent, low-latency link. DTT, by contrast, is meant specifically for on-demand bulk transfers, whenever the customer needs one.

AWS expects DTT facilities to become an integral resource across its major regions worldwide, making it possible for even more customers to move large datasets easily.

Pricing and Availability

Reservations for DTT are charged in port-hours, with pricing determined by network utilization. Although specific pricing details will be released shortly, AWS claims that this solution is more cost-effective than traditional transfer methods.
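Port-hour billing is easy to reason about once a rate is known. The sketch below is a minimal estimator under two loudly stated assumptions: partial hours round up to whole hours, and cost is simply port-hours times a flat rate, with no utilization-based component. The function name `reservation_cost` and any rate passed to it are placeholders, since AWS pricing was not available at the time of writing.

```python
def reservation_cost(duration_minutes: int, ports: int,
                     rate_per_port_hour: float) -> float:
    """Estimate the cost of a DTT reservation billed in port-hours.

    Assumptions (not confirmed by AWS): partial hours are rounded up,
    and the rate is flat per port-hour.
    """
    if duration_minutes <= 0 or ports <= 0 or rate_per_port_hour < 0:
        raise ValueError("duration and ports must be positive, rate >= 0")
    hours = -(-duration_minutes // 60)   # ceiling division: round up to whole hours
    port_hours = hours * ports
    return port_hours * rate_per_port_hour


if __name__ == "__main__":
    # Placeholder rate of 100.0 per port-hour, purely for illustration.
    print(reservation_cost(duration_minutes=90, ports=2, rate_per_port_hour=100.0))
```

A 90-minute session on two ports would count as four port-hours under these assumptions, which is worth weighing against the estimated transfer time when choosing a reservation length.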

The Future of Data Migration

AWS's Data Transfer Terminals are a significant step forward for businesses that need to move large datasets swiftly and securely.

With the promise of shorter upload times and immediate data processing, DTTs could become a must-have for industries that require agility and speed.

As AWS continues to expand the DTT network, this innovation could redefine how enterprises approach large-scale data migration, bringing their data to the cloud faster than ever before.